In this last post of the series, I discuss JSON-based Ajax calls and client-side View Models. I also propose a simple implementation of a knockout.js binding that applies a generic jQuery plug-in to an Html node. The post concludes with a short analysis of Single Page Application frameworks.

In my previous post we saw that Ajax calls returning Html update the needed parts of an Html page while leaving the remainder of the page unmodified. This allows a tighter interaction between user and server, because the user may work on other areas of the page while waiting for a server response, and may ask the server for supplementary information in the middle of a task without losing the whole page state.

The user experience may be improved further if we are able to maintain the whole state of the current task on the client, because this further reduces the need to communicate with the server: the user may prepare all data for the server, receiving all needed help and suggestions immediately, with no server round trip in this first stage. Communication with the server is needed only after everything has been prepared. For instance, the user may modify all data contained in a grid, resorting to a detail window when needed. Entities connected to the main list through one-to-many relations may be edited in the detail view. All of this happens without communicating with the server! Then, when all changes have been made, the user performs a single submit that updates the global state of the system. The server answer may contain corrections made by the server to the data supplied by the user, which are automatically applied to the client copy of the data.
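The grid scenario above can be sketched as follows. This is only a minimal illustration under my own assumptions: `createChangeTracker`, the shape of the payload (`added`, `modified`, `deleted`), and the shape of the server corrections are all hypothetical names chosen for the example, not part of any library.

```javascript
// Hypothetical helper: records the edits made to a client-side copy of a
// list, so that they can all be sent to the server in a single submit.
function createChangeTracker(items, keyName) {
    var added = [], modified = [], deleted = [];

    function find(key) {
        for (var i = 0; i < items.length; i++) {
            if (items[i][keyName] === key) return items[i];
        }
        return null;
    }
    function copyInto(target, values) {
        Object.keys(values).forEach(function (p) { target[p] = values[p]; });
    }

    return {
        add: function (item) {
            items.push(item);
            added.push(item);
        },
        update: function (key, changes) {
            var item = find(key);
            if (!item) throw new Error("unknown key: " + key);
            copyInto(item, changes);
            // Items added locally are sent in full anyway, so only items
            // that already exist on the server are tracked as modified.
            if (added.indexOf(item) < 0 && modified.indexOf(item) < 0) modified.push(item);
        },
        remove: function (key) {
            var item = find(key);
            if (!item) throw new Error("unknown key: " + key);
            items.splice(items.indexOf(item), 1);
            var a = added.indexOf(item);
            if (a >= 0) { added.splice(a, 1); return; } // never reached the server
            var m = modified.indexOf(item);
            if (m >= 0) modified.splice(m, 1);
            deleted.push(key);
        },
        // Body of the single submit.
        buildPayload: function () {
            return JSON.stringify({ added: added, modified: modified, deleted: deleted });
        },
        // Applies corrections returned by the server, assumed to have the
        // shape [{ key: ..., values: { ... } }].
        applyCorrections: function (corrections) {
            corrections.forEach(function (c) {
                var item = find(c.key);
                if (item) copyInto(item, c.values);
            });
        }
    };
}

// Three local edits, with no server round trip until the final submit.
var rows = [{ id: 1, name: "pen", price: 2 }, { id: 2, name: "ink", price: 5 }];
var tracker = createChangeTracker(rows, "id");
tracker.update(1, { price: 3 });
tracker.remove(2);
tracker.add({ id: -1, name: "paper", price: 1 }); // temporary client-side key
var payload = tracker.buildPayload();

// The server might answer with the real key assigned to the new row.
tracker.applyCorrections([{ key: -1, values: { id: 7 } }]);
```

The point of the sketch is that every edit is a purely local operation, and only `buildPayload` produces something that travels to the server.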

In other words, maintaining the whole state of a task on the client side allows a tighter user-machine cooperation, since this cooperation takes place without waiting for answers from a remote server. However, the increased complexity of the client side requires a robust and modular architecture of the client code. In particular, since we move logic, and not only UI, to the client side, Html nodes, which are mainly UI artifacts, must be backed by JavaScript models. Models and Html nodes should cooperate while keeping logic and UI as separate concerns. This means that all processing must take place on models, which are then rendered by using client-side templates. Accordingly, Ajax calls can’t return Html anymore, but must return JavaScript models.

Summing up, all architectures where the whole state of the current task is maintained on the client should have the following features:

- JSON communication with the server. The data exchanged between server and client might also be Xml based but, at the moment, the simpler JSON format is the de facto standard.

- Html is created dynamically by instantiating client templates, so this kind of Web Application is not visible to search engines.

- The state of client and server must be kept aligned by performing simultaneous updates on both client and server in a transactional fashion. This means, for instance, that if a server update fails for some reason, the client must be able to restore the state of the last client-server synchronization.
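The third point can be illustrated with a small sketch that keeps a copy of the last synchronized state and restores it when a server update fails. Everything here is an assumption made for the example: `createSyncedState` is a hypothetical helper, `send` stands for whatever function posts the JSON to the server and returns a promise, and cloning through JSON only works because the state is assumed to be JSON-serializable.

```javascript
// Hypothetical helper: remembers the state of the last successful
// client-server synchronization so that a failed update can be undone.
function createSyncedState(initial) {
    function clone(value) { return JSON.parse(JSON.stringify(value)); }
    var lastSynced = clone(initial);

    var state = {
        current: clone(initial),
        // The server accepted the update: the current state is the new baseline.
        commit: function () { lastSynced = clone(state.current); },
        // The server rejected the update: go back to the last baseline.
        rollback: function () { state.current = clone(lastSynced); },
        // `send` is an assumed function that takes a JSON string and
        // returns a promise that is rejected when the update fails.
        sync: function (send) {
            return send(JSON.stringify(state.current)).then(
                function () { state.commit(); return true; },
                function () { state.rollback(); return false; });
        }
    };
    return state;
}

var order = createSyncedState({ qty: 1 });
order.current.qty = 5;  // local edit
order.rollback();       // what sync() does when the server update fails
```

A real solution would also have to deal with partial failures and with edits made while a request is pending, which this sketch ignores.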

At the moment, point 3 has not received the attention it needs, even in sophisticated Single Page Application frameworks. They supply no general tools to deal with it, so the problem is largely left to custom solutions written by developers.

In the case of Html-based Ajax communication we saw that, since the communication is essentially based on form submits, the server relies on all input fields having adequate names in order to build the model that is then passed to the Action methods serving the client requests. In JSON-based communication, instead, input field names are completely irrelevant, since action methods essentially receive JavaScript models.
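The difference can be made concrete with a small sketch. The `toFormFields` helper below is hypothetical, and it assumes a server that expects field names such as `Items[0].Name` to rebuild nested models from a form post. With JSON the same model is simply serialized as it is.

```javascript
// Hypothetical helper: flattens a model into the field names that a form
// post would need (the "Items[0].Name" convention is assumed here).
function toFormFields(value, prefix, out) {
    out = out || {};
    if (Array.isArray(value)) {
        value.forEach(function (v, i) {
            toFormFields(v, prefix + "[" + i + "]", out);
        });
    } else if (value !== null && typeof value === "object") {
        Object.keys(value).forEach(function (k) {
            toFormFields(value[k], prefix ? prefix + "." + k : k, out);
        });
    } else {
        out[prefix] = String(value);
    }
    return out;
}

var model = { Customer: "Anna", Items: [{ Name: "pen", Qty: 2 }] };

// Form submit: every value needs a carefully built field name.
var formFields = toFormFields(model, "");
// { "Customer": "Anna", "Items[0].Name": "pen", "Items[0].Qty": "2" }

// JSON call: the structure of the model itself carries all the information.
var jsonBody = JSON.stringify(model);
```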

Html ids and CSS classes are also used as “addresses” to select the Html nodes to enhance with JavaScript code. Several frameworks, like knockout.js and angular.js, avoid the use of ids and CSS classes as a way to attach JavaScript behavior to Html nodes. In their case, model properties are “connected” to Html nodes through so-called bindings, which are essentially communication channels between Html nodes and JavaScript properties that update one of them when the other changes. Bindings may be one-way or two-way. Bindings may also connect Html nodes with JavaScript functions, and the developer may define custom bindings. Thus bindings completely solve the problem of connecting Html nodes with JavaScript code, with no need to provide unique ids or selection-purpose CSS classes.
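To give an idea of what a two-way binding does, here is a toy sketch. It is not how knockout.js or angular.js are implemented. It only mimics the calling convention of knockout.js observables (call with no arguments to read, with one argument to write), and `observable` and `bindValue` are names made up for the example. A plain object stands in for the Html input so that the code also runs outside a browser.

```javascript
// Toy observable: reads when called without arguments, writes when called
// with one argument, and notifies its subscribers when the value changes.
function observable(initial) {
    var value = initial, subscribers = [];
    function obs(newValue) {
        if (arguments.length === 0) return value;
        if (newValue !== value) {
            value = newValue;
            subscribers.forEach(function (fn) { fn(value); });
        }
    }
    obs.subscribe = function (fn) { subscribers.push(fn); };
    return obs;
}

// Toy two-way binding. `element` only needs a `value` property and an
// `onchange` slot, so a plain object can stand in for a DOM input.
function bindValue(element, obs) {
    element.value = obs();                                  // initial rendering
    obs.subscribe(function (v) { element.value = v; });     // model -> node
    element.onchange = function () { obs(element.value); }; // node -> model
}

var viewModel = { name: observable("Anna") };
var input = { value: "", onchange: null }; // stand-in for an <input> node
bindValue(input, viewModel.name);

viewModel.name("Bob");  // a model change reaches the node
input.value = "Carla";  // the user types something...
input.onchange();       // ...and the change reaches the model
```

Notice that neither the model nor the node needs an id or a CSS class to find the other: the binding is the only connection between them.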

The following shows how to use a custom knockout.js binding to apply jQuery plug-ins to Html nodes:

```html
<input type="button" value="my button" data-bind="jqplugins: ['button']"/>

<input type="button" value="my button"
       data-bind="jqplugins: [{ name: 'button', options: {label: 'click me'}}]"/>
```

The binding name is followed by an array whose elements may be either simple strings, when there are no plug-in options, or objects with a name and an options property. As you can see, in knockout.js bindings are contained in the Html5 data-bind attribute.

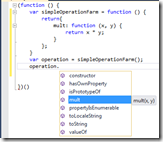

The JavaScript code that defines the jqplugins custom binding is shown below:

```javascript
(function ($) {
    // Applies the jQuery plug-in called `name` to jElement,
    // passing the options object when there is one.
    function applyPlugin(jElement, name, options) {
        if (typeof $.fn[name] !== 'function') {
            throw new Error("unrecognized plug-in name: " + name);
        }
        if (!options) jElement[name]();
        else jElement[name](options);
    }

    ko.bindingHandlers.jqplugins = {
        update: function (element, valueAccessor, allBindingsAccessor) {
            // The binding value is an array whose elements are either
            // plug-in names or { name, options } objects.
            var allPlugins = ko.utils.unwrapObservable(valueAccessor());
            var jElement = $(element);
            for (var i = 0; i < allPlugins.length; i++) {
                var curr = allPlugins[i];
                if (typeof (curr) === 'string')
                    applyPlugin(jElement, curr, null);
                else {
                    applyPlugin(jElement,
                        ko.utils.unwrapObservable(curr.name),
                        ko.utils.unwrapObservable(curr.options));
                }
            }
        }
    };
})(jQuery);
```

The code above makes all available jQuery plug-ins usable in knockout.js based architectures, so we can move to advanced client architectures based on knockout.js without giving up our favorite widgets and CSS/JavaScript frameworks, such as jQuery UI, Bootstrap, jQuery Mobile, and Zurb Foundation.

As a next step, we may move from storing the whole state of a single task to storing the whole application state on the client side, which implies that the whole application must live in a single physical Html page (otherwise the whole state would be lost). Such applications are called Single Page Applications.

In a Single Page Application, virtual pages are created dynamically by instantiating client templates that replace the Html of any previous virtual page in the same physical page. The same physical page may show several virtual pages simultaneously in different areas. For instance, one virtual page might play the role of master page and another the role of detail page.

Most Single Page Application frameworks also have the concept of virtual link and/or of routing, and may connect the virtual pages to the browser history, so that the user may navigate among virtual pages with the usual links and with the browser buttons.
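As a rough idea of what routing involves, the sketch below resolves a virtual link such as `#/orders/42` to a virtual page name plus its input data. It is not the router of any specific framework: `createRouter`, the route patterns, and the page names are all hypothetical, and pattern characters that are special in regular expressions are not escaped.

```javascript
// Hypothetical router: `routes` maps patterns such as "/orders/:id"
// to virtual page names.
function createRouter(routes) {
    var compiled = Object.keys(routes).map(function (pattern) {
        var names = [];
        // Each ":name" segment becomes a capturing group.
        var source = pattern.replace(/:([A-Za-z_]\w*)/g, function (match, name) {
            names.push(name);
            return "([^/]+)";
        });
        return { regex: new RegExp("^" + source + "$"), names: names, page: routes[pattern] };
    });

    // Resolves a virtual link to a page and its parameters, or to null.
    return function resolve(hash) {
        var path = hash.replace(/^#/, "");
        for (var i = 0; i < compiled.length; i++) {
            var m = compiled[i].regex.exec(path);
            if (m) {
                var params = {};
                compiled[i].names.forEach(function (n, j) {
                    params[n] = decodeURIComponent(m[j + 1]);
                });
                return { page: compiled[i].page, params: params };
            }
        }
        return null;
    };
}

var resolve = createRouter({ "/orders": "orderList", "/orders/:id": "orderDetail" });
var target = resolve("#/orders/42");

// In a browser the router would typically be wired to the history, e.g.
// (showVirtualPage is a hypothetical function that instantiates the templates):
// window.addEventListener("hashchange", function () {
//     showVirtualPage(resolve(location.hash));
// });
```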

But… why re-implement the whole browser behavior inside a single physical page? What are the advantages of Single Page Applications compared to “multiple physical pages applications” based on client View Models?

In general, having the whole application state on the client side further reduces the need to communicate with the server, thus increasing the responsiveness to user input. More specifically:

- Once the client templates needed to create a new virtual page have been downloaded from the server, further accesses to the same virtual page become very fast. By contrast, loading a complex page based on a client model that is able to store the whole state of a task may be time consuming, so saving this loading time improves the user experience considerably.

- The state of a previously visited virtual page may be maintained, so that the user finds the virtual page in exactly the same state he/she left it. This improves the cooperation between different tasks that are somehow connected: the user may move back and forth between several virtual pages with the browser buttons while performing a complex task, without losing the state of each page.

- The same physical page may contain several virtual pages simultaneously in different areas. Thus, the user may move back and forth between several virtual pages in one area while keeping the content of another area. This scenario enables advanced forms of cooperation between virtual pages.

- The whole Single Page Application may be designed to work off-line too. When the user has finished working, the whole application state may be saved in the local storage and restored when he/she needs to make further changes, or when he/she can go on-line to perform a synchronization with the server.
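The second and fourth advantages can be sketched together: the state of each virtual page is remembered when the user leaves it, and the whole set of states may be persisted. `createPageStateStore` is a hypothetical helper. The only thing it assumes about `storage` is a `getItem`/`setItem` pair, which is what `window.localStorage` offers in a browser. An in-memory stand-in is used here so the sketch also runs elsewhere.

```javascript
// Hypothetical store for the state of the virtual pages.
function createPageStateStore(storage, storageKey) {
    var saved = storage.getItem(storageKey);
    var states = saved ? JSON.parse(saved) : {};
    return {
        // Called when the user leaves a virtual page.
        remember: function (pageName, state) { states[pageName] = state; },
        // Called when the user comes back; undefined on the first visit.
        recall: function (pageName) { return states[pageName]; },
        // Saves everything, for instance before the user goes off-line.
        persist: function () { storage.setItem(storageKey, JSON.stringify(states)); }
    };
}

// In-memory stand-in for localStorage.
var fakeStorage = {
    data: {},
    getItem: function (k) { return this.data.hasOwnProperty(k) ? this.data[k] : null; },
    setItem: function (k, v) { this.data[k] = String(v); }
};

var store = createPageStateStore(fakeStorage, "appState");
store.remember("orderList", { pageIndex: 3, filter: "open" });
store.persist();

// A later session finds the page in the state the user left it.
var restored = createPageStateStore(fakeStorage, "appState").recall("orderList");
```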

The main problem Single Page Application developers face is keeping a large JavaScript codebase modular and maintainable. Since virtual pages are actually client template <-> ViewModel pairs, the concept of virtual page itself has been conceived in a way that increases modularity. However, virtual pages also need a way to cooperate that doesn’t undermine their modularity and the independence of each virtual page from the remainder of the system.

In particular:

- To keep modularity, the definition of each virtual page should not depend on the remainder of the system, which, in turn, implies that virtual pages may not contain direct references to external data structures.

- Notwithstanding point 1, some kind of cooperation that doesn’t undermine modularity must be achieved among model-view pairs, and between model-view pairs and the application models. A modular cooperation may be achieved by injecting interfaces that connect each model-view pair with the external environment as soon as the model-view pair is added to the page.

- Pointers to data structures contained inside each virtual page should either be avoided or be handled by resource managers, so that they are not used after a virtual page has been released or while it is not in an active state.
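Points 2 and 3 can be illustrated with a small sketch in which a ViewModel receives its connection to the outside world by injection, and reaches data owned by another virtual page only through a handle issued by a resource manager. All names (`createResourceManager`, `DetailViewModel`, `selectedOrder`) are hypothetical and are not taken from any framework.

```javascript
// Hypothetical resource manager: pages publish data under a name, and
// other pages receive handles instead of direct references.
function createResourceManager() {
    var resources = {};
    return {
        publish: function (owner, name, data) {
            resources[name] = { owner: owner, data: data };
        },
        // Called when a virtual page is removed: its data becomes unreachable.
        release: function (owner) {
            Object.keys(resources).forEach(function (name) {
                if (resources[name].owner === owner) delete resources[name];
            });
        },
        // A handle looks the data up on every use, so it can never return
        // data that belonged to a released page.
        handle: function (name) {
            return {
                isValid: function () { return resources.hasOwnProperty(name); },
                get: function () {
                    if (!resources.hasOwnProperty(name)) {
                        throw new Error("resource no longer available: " + name);
                    }
                    return resources[name].data;
                }
            };
        }
    };
}

// The services are injected when the ViewModel is created, so its
// definition contains no hardwired reference to other pages (point 1).
function DetailViewModel(services) {
    this.selection = services.resources.handle("selectedOrder");
}

var manager = createResourceManager();
manager.publish("orderListPage", "selectedOrder", { id: 42 });
var detail = new DetailViewModel({ resources: manager });
var idWhileActive = detail.selection.get().id;

manager.release("orderListPage"); // the list page is removed from the page
var validAfterRelease = detail.selection.isValid();
```

The design choice here is that validity is checked in one place, the handle, instead of being scattered through the code of every ViewModel.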

Separation is ensured, to some extent, by the concept of ViewModel itself. Durandal.js uses AMD modules to encode ViewModels. The AMD protocol is a powerful technique both for dynamically loading and for injecting the other code modules that the current module depends on, and consequently for handling a large JavaScript codebase. However, the dependency tree is hardwired, so the injection mechanism is better suited to injecting code than to injecting dynamic data structures that might depend on the state of the ongoing computation. Accordingly, fully achieving point 2 requires an explicit programming effort. Angular.js uses a custom dependency injection and module loading mechanism. That mechanism is easier to use, but it is less adequate for managing large codebases (in my opinion, not adequate at all). However, the fact that the injection mechanism is less structured makes it easier to inject dynamic data structures when a model-view pair is instantiated.

In general, most frameworks ensure separation together with some kind of cooperation, but no framework offers a complete out-of-the-box solution for point 2, or an out-of-the-box solution for managing the lifetime of the pointers that are injected into model-view pairs to ensure adequate cooperation in the context of the ongoing computation (point 3). More specifically, the lifetime of injected pointers to AMD modules (or to other types of dynamically loaded modules) is handled automatically, but there is no out-of-the-box mechanism for managing the pointers that a model-view pair might hold to data structures contained in another model-view pair. The developer therefore has the burden of coding all the checks needed to ensure the validity of each pointer, in order to avoid using pointers to data structures contained in model-view pairs that have been removed from the page.

The need for a more robust solution to problems 2 and 3 is among the reasons that pushed me to implement a custom Single Page Application framework in the Data Moving Controls suite. The Data Moving SPA framework (see here, and here) relies on contextual rules that “adapt” each virtual page that is being loaded to the “current context”. The “current context” includes both the interface implementations that connect the virtual page to the remainder of the system and information about the current state of the application, such as whether the user is logged in, the current culture (that is, the browser language and culture settings), and so on. Contextual rules are also used to redirect a user who is not logged in to a login virtual page, and to verify whether the user has the authorizations needed to access the current virtual page. The interface implementations passed by the contextual rules to the virtual page View Models also include all the resource managers needed to share data structures safely among all application virtual pages. Another communication mechanism is the possibility of passing input data to any page that is being loaded. Such input data are analogous to the input data passed in a query string. In fact, this input may also be included in virtual links.
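To give a feeling for the idea of contextual rules, here is a generic sketch. It is not the Data Moving SPA API: `loadVirtualPage`, the rule signature, and the shape of the context object are all invented for this illustration. Each rule may either enrich the page being loaded with something taken from the current context, or return the name of a page to redirect to.

```javascript
// Hypothetical rule pipeline (not the actual Data Moving API).
// Each rule receives the page request and the current context; it may add
// services to the request, or return the name of a page to redirect to.
function loadVirtualPage(pageName, context, rules) {
    var request = { page: pageName, services: {} };
    for (var i = 0; i < rules.length; i++) {
        var redirect = rules[i](request, context);
        if (redirect) return loadVirtualPage(redirect, context, rules);
    }
    return request;
}

var rules = [
    // Users who are not logged in are sent to the login virtual page.
    function requireLogin(request, context) {
        if (request.page !== "login" && !context.user) return "login";
    },
    // The current culture is passed to every page that is loaded.
    function injectCulture(request, context) {
        request.services.culture = context.culture;
    }
];

var anonymous = loadVirtualPage("orders", { user: null, culture: "it-IT" }, rules);
var logged = loadVirtualPage("orders", { user: "anna", culture: "it-IT" }, rules);
```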

Another big challenge of Single Page Applications is the duplication of code on both the client and the server side. In fact, the same classes, input validation criteria, and other metadata must be available on both sides, and when the languages used by the two sides are different, this becomes a big problem. The Meteor framework uses JavaScript on both server and client, and allows code sharing between the two sides. The main price to pay for this solution is the use of a language that is not strongly typed on the server side too. In the Data Moving SPA we faced this problem by equipping the SPA server with dynamic JavaScript files implemented as Razor views. This way, JavaScript data structures may be obtained by serializing their equivalent .Net data structures to JavaScript.
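As an illustration of the kind of metadata that can be shared this way, the sketch below shows validation rules expressed as plain data, as a server might serialize them into a dynamically generated JavaScript file, together with a generic client-side validator that consumes them. The rule names (`required`, `maxLength`, `min`), the property names, and the `validate` function are assumptions made for the example.

```javascript
// Metadata as the server might emit it into a dynamic JavaScript file
// (property names and rule names are assumptions made for this sketch).
var productMetadata = {
    Name: { required: true, maxLength: 20 },
    Price: { required: true, min: 0 }
};

// Generic validator: the rules live in the metadata, not in the code, so
// the same rules can be enforced on both client and server.
function validate(model, metadata) {
    var errors = [];
    Object.keys(metadata).forEach(function (prop) {
        var rules = metadata[prop], value = model[prop];
        var missing = value === undefined || value === null || value === "";
        if (missing) {
            if (rules.required) errors.push(prop + " is required");
            return;
        }
        if (rules.maxLength !== undefined && String(value).length > rules.maxLength) {
            errors.push(prop + " is too long");
        }
        if (rules.min !== undefined && value < rules.min) {
            errors.push(prop + " is below " + rules.min);
        }
    });
    return errors;
}

var errors = validate({ Name: "", Price: -1 }, productMetadata);
```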

Another important problem all SPAs must solve is data synchronization between client and server. Durandal.js works quite well with Breeze.js, which offers some synchronization services for the case in which the server may be modeled as an OData source. Breeze.js may also be adapted to most other SPA frameworks, but this solution is acceptable only if there is almost no business logic between the client and the server-side database. In fact, only in this case may the server API be exposed purely as an OData source, with no need for more complex communication.

Meteor takes care of server/client synchronization in a way that is completely transparent to the developer. Such a solution facilitates the coding of simple applications, but may be inadequate for complex business systems that need to control the communication between client and server explicitly.

The Data Moving SPA framework offers retrievalManagers to submit a wide range of (customizable) queries (including OData queries) to the server, while viewModelUpdatesManagers and updatesManagers take care of synchronizing a generic data structure with the server in a transactional fashion, taking into account both changes in several Entity Sets (additions, modifications, and deletes) and changes in temporary data structures (core workspaces). As a result of the synchronization process they may return either errors, which in case of failure are automatically dispatched to the right places in the UI, or remote commands that apply modifications to the client-side data structure being synchronized with the server. While the synchronization process is completely automatic, the developer has full control over which data to synchronize and when to synchronize them, and may also customize various parts of the process.

That’s all! This post ends the short series about JavaScript-intensive web applications. The series is in no way a tutorial that describes in detail all the techniques that have been discussed, but just a guide on how to select the right technique for each application and on how to solve some architectural issues that are not usually discussed elsewhere.

Stay tuned!

Francesco

Tags: Ajax, Single Page Applications, JSON