Last week, Microsoft released the first production-ready version of Blazor WebAssembly, version 3.2. As usual, the release was announced during Microsoft Build. This is the first of a series of posts about Blazor WebAssembly. These posts will not be tutorials: the Internet is already full of Blazor tutorials, so there is no need to add more. Instead, these posts will offer original solutions to common and important problems of Blazor programming. Readers interested in quick starts and tutorials can find them in the official Blazor documentation, which is organized in a tutorial-like fashion.

This first post is about state management and error recovery. You will learn how to let several Blazor pages cooperate on an overall task, and how to prevent users from losing their previous work when an irreversible error occurs before data are sent to the server. As discussed below, the solution I propose is quite different from the ones proposed by others, and has the advantage of requiring only minimal code overhead, so you can adopt it with little coding effort.

Where to store your state

Blazor is all about components, which are continuously and automatically created, updated and destroyed while the user interacts with the application. This means that you can use them just for displaying and processing data, but not for storing state information, since that information might be lost if the component is destroyed and re-created during a rendering operation.

Interfering with the automatic Blazor rendering to prevent a component’s destruction is possible, but it is not advisable as a general solution to the problem of maintaining a reliable state. In fact, disabling component update/destruction is safe only if we are sure that the HTML handled by the component will not change during some processing. That is, it is a means to improve component performance, and not a way to freeze information contained in the component.

However, there are some constraints on when and how a component might be destroyed. More specifically, components may be destroyed only if there are big changes in their ancestor components, that is, in the hierarchy of components containing them. The main consequence is that Blazor pages can be destroyed only when the user changes page, either by clicking a link or by activating Blazor native navigation logic in some other way.

Accordingly, if a whole task is confined within a single page, all state information of that task can be stored in the page. The overall page state can be made available to all components in the page, and to their sub-components, by cascading it with a “CascadingValue” tag that encloses all page components:

<CascadingValue IsFixed="true" Value="myState">

@*

all page components here

*@

</CascadingValue>

Setting the “IsFixed” parameter to true prevents change propagation in case the instance contained in the “myState” property changes, but it improves performance considerably. We can avoid changing the value of “myState” if we define a container class that remains tied to the page for its whole lifetime, and reset/start a new state instance by changing just the content enclosed within this container:

public abstract class StateContainer

{

public abstract object RoughState {get;}

}

public class StateContainer<T> : StateContainer

{

public T State { get; set; }

public override object RoughState => State;

}

It is a best practice to define a non-generic super-class when defining similar generic utility classes.
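To see why the non-generic base class is handy, consider code that must handle several containers without knowing their type arguments. The sketch below repeats the two classes above and adds a hypothetical “StateInspector” helper (not part of the library) that reads every container through “RoughState”:

```csharp
using System;
using System.Collections.Generic;

public abstract class StateContainer
{
    public abstract object RoughState { get; }
}

public class StateContainer<T> : StateContainer
{
    public T State { get; set; }
    public override object RoughState => State;
}

// Hypothetical helper, for illustration only.
public static class StateInspector
{
    // Describes each container without knowing its type argument:
    // the type name of the contained state, or "empty" if no state is set.
    public static List<string> Describe(IEnumerable<StateContainer> containers)
    {
        var result = new List<string>();
        foreach (var container in containers)
        {
            var state = container.RoughState;
            result.Add(state == null ? "empty" : state.GetType().Name);
        }
        return result;
    }
}
```

A list that mixes a “StateContainer<string>”, a “StateContainer<int>” and an empty “StateContainer<Uri>” can be passed to “Describe” as a plain list of “StateContainer”, which would not be possible with the generic class alone.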

Once state has been put in place this way, page components may interact with “myState” either by having its properties/sub-properties as parameters, or by defining a “CascadingParameter”:

@code{

....

[CascadingParameter]

StateContainer<T> pageStatus { get; set; }

}

So, if a whole task remains confined within a single page, the above setup solves the state management problem. But:

- What if a task spans more pages?

- What if the user wants to move to another page, for some reason, and then wants to resume the previous task?

- What if an irreversible error suddenly crashes the application?

In all the cases above, the solution based on a page-maintained state fails! Once the user leaves the page, the state is lost, and returning to the same page starts from a cleared page state!

The first two problems can be easily solved by defining the state shared among several pages as a singleton in the Dependency Injection engine, as shown below:

public static async Task Main(string[] args)

{

var builder = WebAssemblyHostBuilder.CreateDefault(args);

builder.RootComponents.Add<App>("app");

builder.Services.AddSingleton<StateContainer<MyStateClass>>();

After that, each page that operates on that state just needs to inject this singleton with the simple declaration below:

@inject StateContainer<MyStateClass> myState

Then “myState” can be used to pass parameters to all components in the page. As with “CascadingValue”, components can also inject the state themselves instead of receiving it as parameters from the Blazor page.

Having encapsulated the actual state inside the “StateContainer<T>” container, we can remove it when a task is completed and replace it with a fresh copy, so the user can start a new task.
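As a minimal sketch of this replacement, the snippet below swaps the state held by the container while the container instance itself (the singleton or the cascaded value) stays the same. “CompleteTask” and the shape of “MyStateClass” are assumptions made only for this illustration, and the non-generic base class is omitted for brevity:

```csharp
using System;
using System.Collections.Generic;

// Same shape as the container defined above (base class omitted for brevity).
public class StateContainer<T>
{
    public T State { get; set; }
}

// Hypothetical state class, used only for this illustration.
public class MyStateClass
{
    public List<string> Items { get; } = new List<string>();
}

public static class StateContainerExtensions
{
    // Hypothetical helper: returns the finished state and installs a fresh one,
    // so every page holding the container sees the new, empty state.
    public static T CompleteTask<T>(this StateContainer<T> container) where T : new()
    {
        var finished = container.State;
        container.State = new T();
        return finished;
    }
}
```

Since pages and components keep a reference to the container and not to the state, none of them needs to be notified that a new task has started. They just read “State” again at the next rendering.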

However, sharing all state objects as singletons defined in the Dependency Injection engine doesn’t solve the problem of losing all information if the Blazor application crashes, since state information is held in volatile memory. We analyze this problem in the next section.

Error Handling and State Recovery

Unlike ASP.NET Core, Blazor WebAssembly has no centralized exception handling, so any exception thrown during component rendering can crash the application, causing the whole application state to be lost.

To overcome this problem, many authors propose taking advantage of every opportunity (form submit, successful field validation, etc.) to save the application state in some permanent storage, either locally or on the server.

However, by continuously communicating with the server we would give up most of the advantages of Single Page Applications, such as fast response to all user actions, less load on the server, and a simpler and more modular server application layer.

In general, interleaving business logic with continuous saving operations would undermine code modularity and the single responsibility principle, producing difficult-to-maintain “spaghetti” code.

Modularity could be recovered by encapsulating the whole save logic either in all form submit operations or in the getters and setters of state object properties.

The first option is easily achieved by implementing a form component that automatically saves state information to disk after each successful submit. Modern browsers offer several options for saving information to disk with no need to send it to the server, such as IndexedDB and localStorage. Nevertheless, this approach is not very appealing, since it is not clear when information saved on disk can be deleted, nor which rules to use for deciding when to recover the in-memory state from disk. In short, we have two versions of each state, but no well-defined rules on how to synchronize them. In fact, it is not clear when a task is finished, so that all associated disk information can be deleted and is not reused in the next task.

The problem with the above approach is that we have no explicit representation of tasks; instead, we attach information saving to forms that know nothing about the overall business logic.

Hooking each object property to a permanent structure on disk avoids having two different versions of the same state, since information is not kept in memory at all, but it has other heavy drawbacks, namely:

- Performance overhead due to the continuous disk operations.

- The only way to map unique in-memory instances to unique disk instances is to assign a unique string or numeric Id to each state object. While instances that need to be sent to the server already have their unique keys, this way we are forced to assign primary keys also to intermediate results of client processing.

- While in-memory instances that are no longer used are automatically garbage collected, there is no easy way to garbage collect their images on disk. Moreover, since the user can close the application by simply closing the browser, the only chance to clear old unused disk images is when the application starts, but at that point the application does not have enough information to decide what to do. In fact, since we don’t intercept unhandled exceptions, we can’t record anywhere that the application crashed and that the state must be recovered at the next start. Moreover, since the automatic saving is not tied to the actual start and end of tasks, the application has no way to understand by inspection that the disk state contains incomplete tasks that must be recovered.

- All properties of state classes must be redefined manually so that they call the appropriate disk write and disk read routines. This is an actual nightmare! This disadvantage could be overcome by hooking the blur events of input fields instead of class properties, but it is not easy to inform onblur handlers about the id of the object being processed. Moreover, input fields are not the only way the application can change the properties of state objects.

The above discussion about error recovery strategies makes clear that any effective state recovery strategy needs two important capabilities:

- The capability to recognize when the application crashes, and to trigger adequate actions in that case.

- An explicit representation of task starts and completions. In fact, a state must be recovered only if its associated task has been interrupted by an application crash.

While Blazor WebAssembly has no centralized exception handling logic, all errors are logged to the standard .NET Core logging system, so we can implement our own centralized error handling with the help of a custom logger, that is, by providing custom implementations of the two interfaces below:

public interface ILogger

{

IDisposable BeginScope<TState>(TState state);

bool IsEnabled(LogLevel logLevel);

void Log<TState>(LogLevel logLevel, EventId eventId,

TState state, Exception exception,

Func<TState, Exception, string> formatter);

}

public interface ILoggerProvider : IDisposable

{

ILogger CreateLogger(string categoryName);

}

“ILoggerProvider” is a provider whose only purpose is to create an implementation of ILogger, when it is required, possibly passing it instances of other classes taken from the Dependency Injection engine.

When an unhandled exception occurs, the “ILogger.Log” method is called and the exception is passed in its “exception” parameter (the fourth one). However, since “ILogger.Log” might also be called to log simple information, we also need to check the first argument (“logLevel”), which contains the log severity level. More specifically, a log call informs us about an unhandled exception only if:

logLevel >= LogLevel.Error

Thus, our custom logger must perform error handling actions just in this case.

In the next section we explain in detail how to perform centralized error handling with a custom logger.

Implementing Centralized Error Handling

As a first step, let’s create a new Blazor WebAssembly project. In order to run and debug Blazor WebAssembly 3.2 you need a Visual Studio 2019 installation updated at least to version 16.6. You can download the latest free version of Visual Studio here.

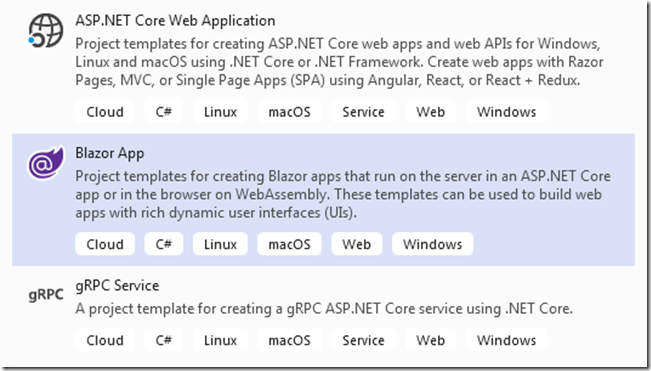

Open Visual Studio and choose “Create new project”. Then, select the Blazor project template, as shown below:

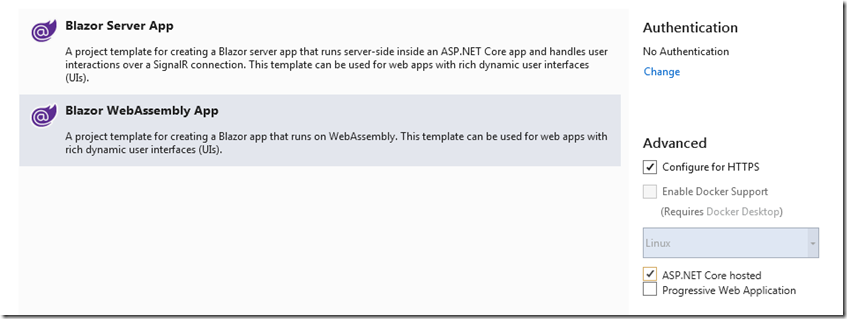

When asked for the project name, name the project “StateManagement”. Finally, in the last screen select “Blazor WebAssembly”, and ensure that both “Configure for HTTPS” and “ASP.NET Core hosted” are checked, while “Enable Docker support” and authentication are both disabled, as shown below:

Once everything is ready, run the project to verify that the initial WebAssembly example application runs.

If everything is ok, we can move to the second step: adding a library project in which to implement our error handling and state management, so we can reuse it in other projects too.

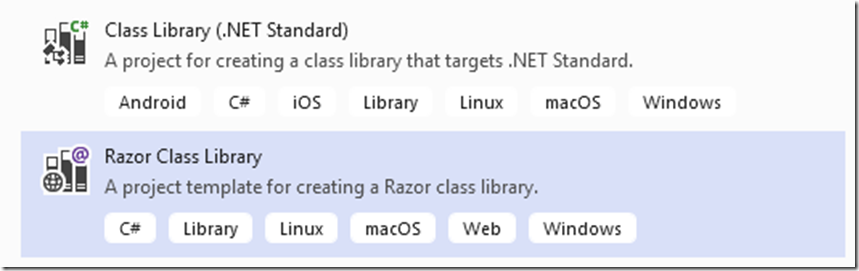

Right click on the root solution in the “Solution Explorer” tab and select “Add new project”, then select the “Razor Class Library” template:

Then name the library “StateManager”. In the final screen, ensure that “Support pages and views” and all other options are unchecked.

After the library project is ready right click on the dependencies of the “StateManagement.Client” WebAssembly project and add the library project as a dependency.

Now we are ready to start writing our code!

Implementing the error logic

We will first implement general-purpose error handling logic, and then we will implement our custom logger to trigger the error handling process. We can opt for the simple interface shown below:

public interface IErrorHandler

{

event Func<Exception, Task> OnException;

Task Trigger(Exception ex);

}

Basically, it is a centralized place where all application parts that need to be notified about an unhandled exception can register their handlers with a “+=” on the “OnException” event. On the other side, “exception detectors” like our custom logger can run all these handlers by calling the “Trigger” method of this interface.

The interface implementation is straightforward:

public class DefaultErrorHandler: IErrorHandler

{

public event Func<Exception, Task> OnException;

public async Task Trigger(Exception ex)

{

if (OnException != null) await OnException(ex);

}

}

Let’s define both the interface and its implementation under a newly created folder of our library project called “ErrorHandling”.
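One caveat is worth keeping in mind: when an event of type “Func<Exception, Task>” has several subscribers, invoking it returns only the Task of the last handler in the invocation list, so awaiting the invocation waits only for that handler. If every subscriber must finish before “Trigger” completes, a possible variant is sketched below. “SequentialErrorHandler” is a hypothetical name, not part of the library described in this post:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

public interface IErrorHandler
{
    event Func<Exception, Task> OnException;
    Task Trigger(Exception ex);
}

// Hypothetical variant of DefaultErrorHandler that awaits every subscriber in turn.
public class SequentialErrorHandler : IErrorHandler
{
    public event Func<Exception, Task> OnException;

    public async Task Trigger(Exception ex)
    {
        // Take a snapshot, so handlers may unsubscribe while we iterate.
        var handlers = OnException;
        if (handlers == null) return;

        // GetInvocationList exposes each subscriber, in subscription order.
        foreach (Func<Exception, Task> handler in handlers.GetInvocationList())
        {
            await handler(ex);
        }
    }
}
```

With this variant, a slow asynchronous handler registered first completes before the next handler starts, at the price of running handlers strictly one after the other.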

The basic idea is to register both the interface and its implementation in the Dependency Injection engine as a singleton with something like:

services.AddSingleton<IErrorHandler, DefaultErrorHandler>();

But we will do this later, by encapsulating this instruction inside an “AddStateManagement” extension method.

The next step is the implementation of “ILogger”.

Implementing the custom logger

Let’s name our custom logger “ErrorRecoveryLogger”, and place it under the same “ErrorHandling” folder we defined before.

The code is quite simple, but contains some dummy members, since we don’t need all “ILogger” functionalities:

public class ErrorRecoveryLogger : ILogger

{

IErrorHandler errorHandler;

public ErrorRecoveryLogger(IErrorHandler errorHandler)

{

this.errorHandler = errorHandler;

}

public IDisposable BeginScope<TState>(TState state)

{

return new FakeScope();

}

public bool IsEnabled(LogLevel logLevel)

{

return logLevel >= LogLevel.Error;

}

public void Log<TState>(LogLevel logLevel, EventId eventId, TState state, Exception exception, Func<TState, Exception, string> formatter)

{

if (logLevel < LogLevel.Error) return;

errorHandler.Trigger(exception);

}

}

public class FakeScope : IDisposable

{

public void Dispose()

{

}

}

The “FakeScope” class is an IDisposable fake class implemented just because “BeginScope” must return an IDisposable. We coded a fake implementation of this method since we don’t need it.

“IsEnabled” must return true only when “logLevel” is at least “LogLevel.Error”, to inform all callers that this logger is interested only in errors.

When the “Log” method is called we verify that “logLevel” is at least “LogLevel.Error” and then we call the “Trigger” method of the previously defined “IErrorHandler” interface.

This interface is passed to the logger constructor, which stores it in a private field.
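Two details of the “Log” method deserve attention. First, “Log” is synchronous, so the Task returned by “Trigger” is simply dropped, and an exception thrown by an asynchronous handler would go unnoticed. Second, error-level messages may be logged without any exception, in which case “exception” is null. A possible way to deal with both, sketched here with a hypothetical “FireAndForget” helper that is not part of the library, is:

```csharp
using System;
using System.Collections.Generic;
using System.Threading.Tasks;

// Hypothetical helper, for illustration only.
public static class FireAndForget
{
    // Awaits the task and reports a failure to onFault instead of letting it vanish.
    public static async Task Observe(Task task, Action<Exception> onFault)
    {
        try
        {
            await task;
        }
        catch (Exception ex)
        {
            onFault(ex);
        }
    }
}

// Possible usage inside ErrorRecoveryLogger.Log, after the severity check:
//
//   if (exception == null) return;  // error-level message with no exception attached
//   _ = FireAndForget.Observe(errorHandler.Trigger(exception),
//                             ex => Console.Error.WriteLine(ex));
```

Failures are written to the console here rather than to a logger, since logging them at error level would call our custom logger again.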

The “ILoggerProvider” implementation just creates our custom logger and passes it the “IErrorHandler” interface:

public class ErrorRecoveryLogProvider : ILoggerProvider

{

IErrorHandler errorHandler;

public ErrorRecoveryLogProvider(IErrorHandler handler)

{

errorHandler = handler;

}

public ILogger CreateLogger(string categoryName)

{

return new ErrorRecoveryLogger(errorHandler);

}

public void Dispose()

{

}

}

“IErrorHandler” will be passed to the constructor of our “ILoggerProvider” by the Dependency Injection engine, and our “ILoggerProvider”, in turn, will use it to create its loggers.

Putting everything in place with an extension method

As a final step of our error handling logic implementation, we define an “IServiceCollection” extension method that we will call from inside the Blazor application “Main” in order to set up the whole error handling logic we designed.

Let’s create a new “Extensions” folder in the library project and add to it our extension class, in a file called “StateHandling.cs”. The implementation is as follows:

public static class StateHandling

{

public static ILoggingBuilder

CustomLogger(this ILoggingBuilder builder)

{

builder.Services

.AddSingleton<ILoggerProvider, ErrorRecoveryLogProvider>();

return builder;

}

public static IServiceCollection AddStateManagemenet(

this IServiceCollection services)

{

services.AddSingleton<IErrorHandler, DefaultErrorHandler>();

services.AddLogging(builder => builder.CustomLogger());

return services;

}

}

The “IServiceCollection” extension method adds our “IErrorHandler” implementation to the Dependency Injection engine, and then adds our custom logger to all other pre-existing loggers by calling “AddLogging”. The logger is added with the “builder pattern”, that is, by defining its setup with an “ILoggingBuilder” extension. This is a standard pattern for registering loggers.

Testing Our Centralized Error Handling

In order to verify that our error handling logic works, let’s go through the following steps:

- Add a call to AddStateManagemenet() in the Program.cs file of the “StateManagement.Client” Blazor WebAssembly project, as shown below:

public static async Task Main(string[] args)

{

var builder = WebAssemblyHostBuilder.CreateDefault(args);

...

builder.Services.AddStateManagemenet();

await builder.Build().RunAsync();

}

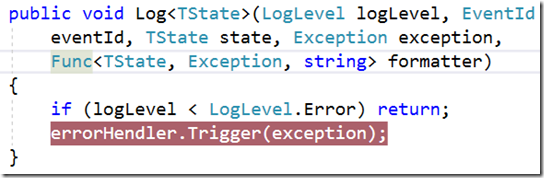

- Then, place a breakpoint in our custom logger implementation on the line where it calls the “Trigger” method of the “IErrorHandler” interface, as shown below:

- Finally, open the “Counter.razor” page in the Pages folder of the “StateManagement.Client” WebAssembly project, and modify the “IncrementCount” method so that it throws an exception when the increment button is clicked, as shown below:

private void IncrementCount()

{

throw new Exception();

currentCount++;

}

- Run the solution, then go to the counter page, and click the “Click me” button.

When the button is clicked, the Blazor application UI signals the error and, immediately after, the breakpoint we placed is hit! Our logger logic was able to detect the unhandled exception, and to inform our centralized error handling logic.
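The same wiring can also be checked without a browser. The sketch below is an assumption-laden smoke test, not part of the sample project: it supposes a plain console project that references the Microsoft.Extensions.Logging NuGet package, repeats the types defined above in compact form, and uses a hypothetical “SmokeTest” class to log an error and capture what reaches “IErrorHandler”:

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.DependencyInjection;
using Microsoft.Extensions.Logging;

public interface IErrorHandler
{
    event Func<Exception, Task> OnException;
    Task Trigger(Exception ex);
}

public class DefaultErrorHandler : IErrorHandler
{
    public event Func<Exception, Task> OnException;
    public async Task Trigger(Exception ex)
    {
        if (OnException != null) await OnException(ex);
    }
}

public class FakeScope : IDisposable
{
    public void Dispose() { }
}

public class ErrorRecoveryLogger : ILogger
{
    private readonly IErrorHandler errorHandler;
    public ErrorRecoveryLogger(IErrorHandler errorHandler) => this.errorHandler = errorHandler;
    public IDisposable BeginScope<TState>(TState state) => new FakeScope();
    public bool IsEnabled(LogLevel logLevel) => logLevel >= LogLevel.Error;
    public void Log<TState>(LogLevel logLevel, EventId eventId, TState state,
        Exception exception, Func<TState, Exception, string> formatter)
    {
        if (logLevel < LogLevel.Error) return;
        errorHandler.Trigger(exception);
    }
}

public class ErrorRecoveryLogProvider : ILoggerProvider
{
    private readonly IErrorHandler errorHandler;
    public ErrorRecoveryLogProvider(IErrorHandler handler) => errorHandler = handler;
    public ILogger CreateLogger(string categoryName) => new ErrorRecoveryLogger(errorHandler);
    public void Dispose() { }
}

// Hypothetical console check: returns the exception that reached IErrorHandler, if any.
public static class SmokeTest
{
    public static Exception Run()
    {
        var services = new ServiceCollection();
        services.AddSingleton<IErrorHandler, DefaultErrorHandler>();
        services.AddLogging(b => b.Services.AddSingleton<ILoggerProvider, ErrorRecoveryLogProvider>());
        using var provider = services.BuildServiceProvider();

        Exception seen = null;
        provider.GetRequiredService<IErrorHandler>().OnException += ex =>
        {
            seen = ex;
            return Task.CompletedTask;
        };

        var logger = provider.GetRequiredService<ILoggerFactory>().CreateLogger("SmokeTest");
        logger.LogInformation("ignored, since it is below the Error severity");
        logger.LogError(new InvalidOperationException("boom"), "simulated crash");
        return seen;
    }
}
```

Calling “SmokeTest.Run()” should return the simulated exception, which shows that only error-level log calls reach the registered handlers.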

That’s all for now!

In the second part of this article we will see how to share state information among Blazor pages, and how to save to disk and then recover the state of all uncompleted tasks in case of exceptions.

Click here to access the whole code on GitHub!

Francesco

Tags: Error Recovery, Blazor